Local and Global Flatness for Federated Domain Generalization

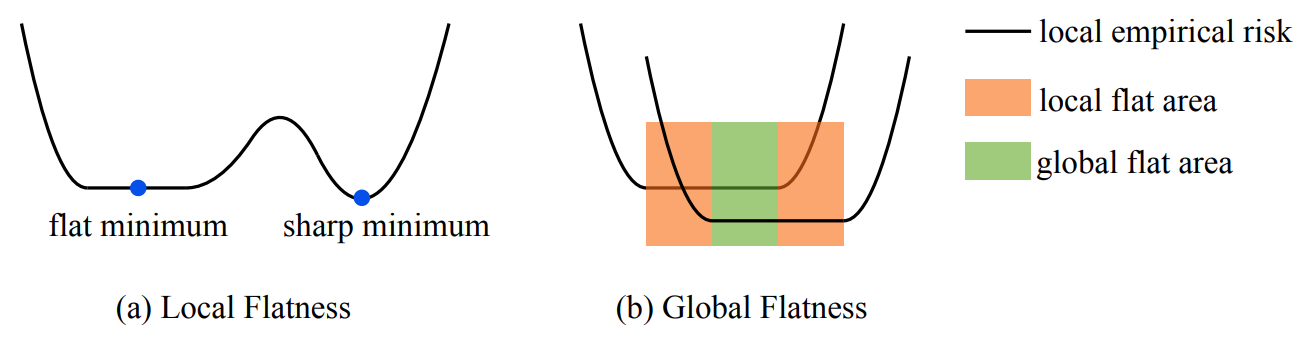

Federated learning aims to train a unified model using isolated data distributed across multiple clients. However, traditional federated learning settings assume identical data distributions for the training and testing sets, neglecting the need for cross-domain generalization when this assumption does not hold. Federated domain generalization seeks to develop a model that can generalize to unseen testing domains using data that is isolated on multiple clients and sampled from multiple training domains. The challenges in this problem stem from both the lack of access to data from unseen testing domains and the absence of data from multiple training domains on each client. To address this, we propose a simple federated domain generalization method that seeks both local and global flatness. The proposed local flatness regularization prevents the model from converging to sharp minima, while the global flatness regularization encourages movement toward the global optimum. Both flatness regularizations rely on adversarial parameter perturbation, with two perturbation methods proposed: one at the level of weights and one at the level of singular values. Experimental results demonstrate that our proposed methods achieve state-of-the-art performance on standard federated domain generalization benchmarks. The code is publicly available at https://github.com/cnyanhao/FedLGF.
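To give a rough sense of what weight-level adversarial parameter perturbation can look like, the following is a minimal sketch of a generic sharpness-aware update on a toy quadratic loss. It is not the FedLGF implementation: the function names (`adversarial_perturbation`, `flat_step`), the radius `rho`, the learning rate `lr`, and the toy loss are all assumptions made for illustration, and the singular-value perturbation and the global flatness term are not shown. The authors' actual code is in the repository linked above.

```python
import numpy as np

def adversarial_perturbation(grad, rho):
    """Return the perturbation of norm rho that points along the gradient.

    To first order, this is the direction in weight space that
    increases the loss the most (hypothetical helper for illustration).
    """
    norm = np.linalg.norm(grad)
    return rho * grad / (norm + 1e-12)  # small constant avoids division by zero

def flat_step(w, loss_grad, rho=0.05, lr=0.05):
    """One generic sharpness-aware update (an assumption, not the paper's rule).

    1. Compute the gradient at the current weights.
    2. Move to the adversarially perturbed weights w + eps.
    3. Descend using the gradient evaluated at the perturbed point.
    """
    g = loss_grad(w)
    eps = adversarial_perturbation(g, rho)
    g_adv = loss_grad(w + eps)
    return w - lr * g_adv

# Toy loss with one sharp direction (curvature 10) and one flat direction (0.1).
curvature = np.array([10.0, 0.1])

def toy_loss(w):
    return 0.5 * np.sum(curvature * w ** 2)

def toy_grad(w):
    return curvature * w

w = np.array([1.0, 1.0])
initial_loss = toy_loss(w)
for _ in range(50):
    w = flat_step(w, toy_grad)
final_loss = toy_loss(w)
```

In this sketch the descent direction is taken at the worst-case nearby point rather than at the current weights, which is the common way a flatness-seeking regularizer penalizes sharp regions of the loss surface.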

Published in ECCV 2024

Recommended citation: Hao Yan, Yuhong Guo. Local and Global Flatness for Federated Domain Generalization. ECCV 2024.
